In 2017, Ian Davidson, a professor of computer science at UC Davis, was on sabbatical as a fellow of the Collegium de Lyon in France. The institute brings together intellectuals, philosophers, artists and academics from all over the world to live together for one year to "think about great things."

He recalls a moment during this sabbatical year when he was describing his work on social network analysis, and a social scientist asked him if he had ever considered how his work was affecting people. It shifted Davidson's perspective in a major way.

"I took a step back. I had never really thought about the ramifications of my research," he said. "I was saying to the audience, 'Here's an algorithm that can run very efficiently and can work out which are the influential nodes in the network that can be knocked out and destroy the network.' We typically study problems in abstraction; we never talk about application. That was a wake-up call."

Davidson's research has since evolved to investigate fair and explainable deep learning AI: fair, as in how to make AI operate more fairly and with less bias; and explainable, as in how to program an AI, once it can make fair decisions, to explain why a decision is trustworthy and fair.

One of his current projects attempts to teach fairness to AI. It is funded by a Google grant and an award from the National Science Foundation. Davidson is also investigating explainable AI in collaboration with the UC Davis Medical Center, with funding from the NIH.

As with teaching humans ethics, fairness and trustworthiness, building these principles into AI is complex and not without its hurdles.

AI's (not really) biased tendencies

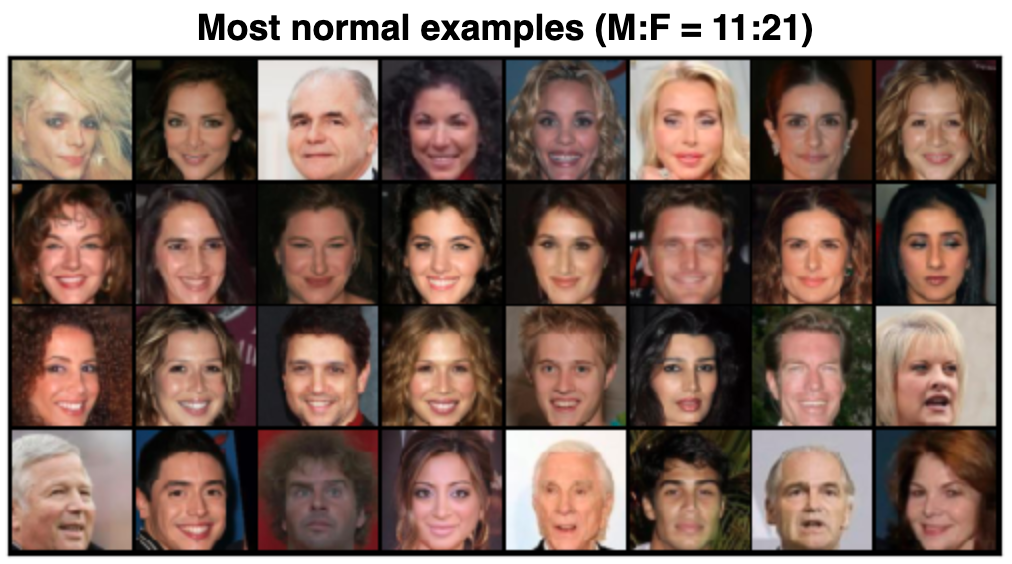

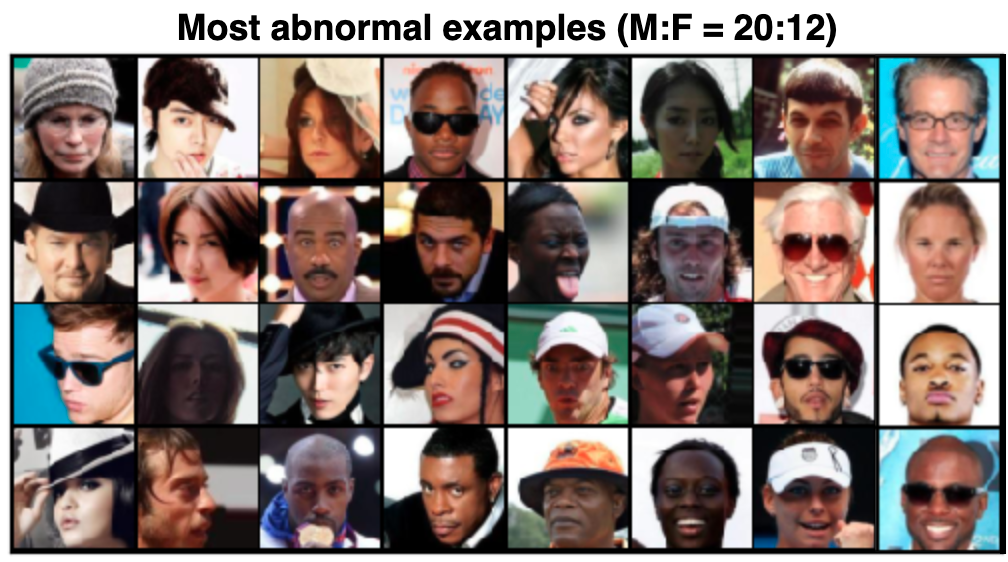

In 2021, Davidson and his team of researchers showed an algorithm a large selection of human faces and asked it to sort out the "unusual-looking people." The algorithm determined that "normal-looking people" were overwhelmingly blonde women and the "unusual-looking people" were largely Black, Asian or male. From a human standpoint, this appears to be based on bias.

"AI is obtained by teaching a machine how to replicate a task that a human would do, but when you do that, the machine knows nothing about our value system," Davidson said. "It will learn to make decisions that are correct in the sense that they are optimizing some sort of well-defined objective function. All these algorithms have a computation they're performing, and that computation has a very mathematical definition. When they optimize this, they make decisions that don't align with our ethics."

Earlier work by Davidson and other researchers addressed unfairness by specifically telling the machine how to be fair and who to be fair to. For example, in the previously mentioned project, the machine was instructed to distribute gender and ethnicity between the normal and unusual groups in accordance with their occurrence in the general population: half women, half men and 10% Black. However, that work only corrects known biases and could easily overlook other biases yet undiscovered.
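The population-matching rule described above can be checked in a few lines of code. What follows is a minimal sketch, not the researchers' implementation: the function names `group_shares` and `parity_gaps`, the record layout and the toy data are all assumptions made for illustration. It measures how far a group's demographic mix sits from a set of target population shares.

```python
from collections import Counter

def group_shares(records, attribute):
    """Return the fraction of records holding each value of `attribute`."""
    total = len(records)
    if total == 0:
        return {}
    counts = Counter(r[attribute] for r in records)
    return {value: n / total for value, n in counts.items()}

def parity_gaps(records, attribute, targets):
    """Observed share minus target share for each value listed in `targets`."""
    shares = group_shares(records, attribute)
    return {value: shares.get(value, 0.0) - target
            for value, target in targets.items()}

# Hypothetical toy data: the members an algorithm placed in its "normal" group.
normal_group = [
    {"gender": "woman"},
    {"gender": "woman"},
    {"gender": "woman"},
    {"gender": "man"},
]

# Target shares follow the "half women, half men" rule quoted in the text.
gaps = parity_gaps(normal_group, "gender", {"woman": 0.5, "man": 0.5})
print(gaps)
```

A positive gap means a value is overrepresented in the group relative to its target, and a negative gap means it is underrepresented. A check like this only covers attributes someone thought to list, which is the limitation the article points to.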

In his research on AI fairness, Davidson prompted an algorithm to sort images of people into 'normal-looking' and 'unusual-looking' categories. Its normal group was made up of mostly white women, while the 'unusual' group comprised mostly Black, Asian or male. (Courtesy Ian Davidson)

With the funding from Google and the NSF, Davidson addresses this concern by exploring the complex task of how to teach fairness to a machine. He does this using self-supervised learning, in which the machine teaches itself by generating its own contrastive examples.

For example, the machine may discover, without being told, that people wearing glasses are overwhelmingly labeled as being unusual. To combat this, the machine self-generates examples of people the machine believes are normal but with the addition of glasses as counterexamples to the stereotype that unusual people wear glasses.
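The glasses example can be illustrated with a toy sketch. This is not Davidson's method, which works on images with deep learning models; it is a simplified stand-in that assumes each person is already described by a dictionary of yes/no attributes. The function names, the 0.8 threshold and the data are hypothetical.

```python
from collections import Counter

def overrepresented_attributes(examples, threshold=0.8):
    """Find attributes whose holders are labeled 'unusual' at least `threshold` of the time."""
    holders = Counter()
    unusual_holders = Counter()
    for ex in examples:
        for name, present in ex["attributes"].items():
            if present:
                holders[name] += 1
                if ex["label"] == "unusual":
                    unusual_holders[name] += 1
    return [name for name, n in holders.items()
            if unusual_holders[name] / n >= threshold]

def make_counterexamples(examples, attribute):
    """Copy each 'normal' example that lacks `attribute`, add it, and keep the 'normal' label."""
    out = []
    for ex in examples:
        if ex["label"] == "normal" and not ex["attributes"].get(attribute, False):
            attrs = dict(ex["attributes"])
            attrs[attribute] = True
            out.append({"attributes": attrs, "label": "normal"})
    return out

# Hypothetical toy data: every person with glasses was labeled "unusual".
data = [
    {"attributes": {"glasses": True, "hat": False}, "label": "unusual"},
    {"attributes": {"glasses": True, "hat": True}, "label": "unusual"},
    {"attributes": {"glasses": False, "hat": True}, "label": "normal"},
    {"attributes": {"glasses": False, "hat": False}, "label": "normal"},
]

flagged = overrepresented_attributes(data)
augmented = data + [c for name in flagged for c in make_counterexamples(data, name)]
print(flagged, len(augmented))
```

The point of the sketch is the loop of discovery followed by augmentation: no one tells the code that glasses matter, and the added examples pair the flagged attribute with the "normal" label to weaken the stereotype in later training.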

Fair is fair: why AI just can't

One obstacle that Davidson deals with is the definition of fairness. The previously mentioned parameter, in which the distribution of gender and ethnicity is in accordance with the general population, is a legal definition of fairness established in California to ensure things like hiring and distribution of housing loans are equitable.

However, in conversations with philosophers both locally in California and overseas in Europe, Davidson was presented with multiple definitions of what being fair means.

"I was asking these philosophers, 'What about other definitions of fairness?' They were talking about collective fairness, justice and all sorts of definitions," he said. "They said, 'We can't agree upon it, but we can give you three, and they're all going to be at odds with one another.' I wanted a nice mathematical formula."

Based on their definitions of fairness, Davidson found that he could tell the AI to be fair to the group or to the individual, but not both. He could also instruct the AI to be fair with respect to one grouping, like gender or race, but not multiple groupings, like gender and race together. One definition of fairness can be enforced, but enforcing multiple types of fairness at once is computationally impractical.

"You have to pick your poison," he said. "You can ask the machine to be fair with respect to group-level fairness or individual-level fairness. Now, you can try to do both, but the machine will take years and years to find a solution. It just can't be done efficiently."

The goal then becomes making AI as fair as possible and, once it is, ensuring its decisions can be trusted.

Explainable AI is trustworthy AI

AI, in certain forms, has become a societal normality with which many people are comfortable. The tailored pop-up ads on Instagram or the friend suggestions on Facebook, for instance, don't raise concerns about trustworthiness because they are not typically life changing.

Adopting AI in sectors like healthcare, where an algorithm may have a huge impact on life-altering treatments, is trickier.

With funding from the NIH, Davidson's lab and the UC Davis Medical Center are exploring teaching a functional MRI scanner, which measures brain activity by detecting changes in blood flow, to determine what type of treatment might work well on a young adult with schizophrenia based on how their brain scan compares to brain scans of previous patients.

To date, the machine performs with over 80% accuracy. However, hospitals and government oversight organizations like the NIH want to know not just that it works but also how it works. In other words, the machine needs to be able to explain how it arrived at its result.

"Hospitals, the NIH, they want this black box that we deploy on patients to be explained so they can check it is based on sound principles," he said. "This explanation problem is really critical when machines are making life-changing decisions on humans."

From Davidson's perspective, AI and machines are only going to continue to integrate into society at large and be relied upon to make more and more important decisions in daily life. Soon, Davidson posits, AI may be responsible for school admissions, job hiring and even teaching basics like math to school-age children. The goal then is to make AI as trustworthy as possible.

"We have huge amounts of information coming in that has to be processed quickly," Davidson said. "Machines are going to have to take over and I would demand that they be trustworthy. We want them to align with what a human would do and can trust that their decisions are consistent with whatever culture or standards that we have."

Media Resources

Jessia Heath is a writer with the UC Davis College of Engineering, where this story was originally published.